Prepared statement java memory leak

Developers find it convenient to use PreparedStatement objects to send SQL statements to the database. When a PreparedStatement object is created with an SQL statement, the statement is sent to the database and compiled right away. As a result, when the PreparedStatement is executed, the database can simply run its SQL statement without having to compile it first. Another advantage is that the SQL statement can be parameterized, i.e. you can supply different values each time you execute the same statement.

A good explanation can be found in Oracle's "Using Prepared Statements" tutorial.

Here we discuss an OutOfMemoryError on one of our production servers, where the majority (87.54%) of the JVM heap was occupied by instances of “oracle.jdbc.driver.T4CPreparedStatement”.


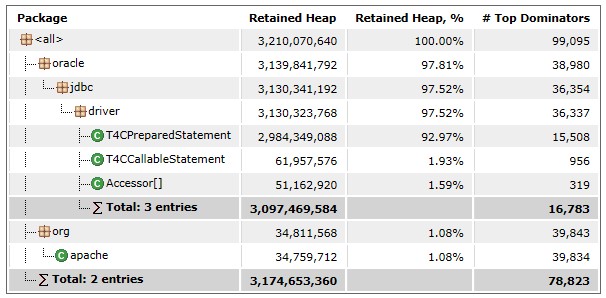

We used the Eclipse Memory Analyzer Tool (MAT) to analyze the heap dump. The analysis is below.

A large number of prepared statements were being cached in the JVM, outgrowing the memory available to it; this exhausted the Java heap and ultimately caused the OOM. This is a typical scenario under high concurrency with limited resources.

Below is the MAT Leak Suspects report, which shows that 87.54% of the total heap is taken by a large number of prepared statements:

15,507 instances of "oracle.jdbc.driver.T4CPreparedStatement", loaded by "org.apache.catalina.loader.StandardClassLoader @ 0x6fffbb5e8" occupy 2,984,349,056 (87.54%) bytes.

Biggest instances:

- oracle.jdbc.driver.T4CPreparedStatement @ 0x75e9f2bc0 - 38,022,808 (1.12%) bytes
- oracle.jdbc.driver.T4CPreparedStatement @ 0x74ae4dcc0 - 38,021,520 (1.12%) bytes
- oracle.jdbc.driver.T4CPreparedStatement @ 0x75323dd50 - 38,021,520 (1.12%) bytes
- oracle.jdbc.driver.T4CPreparedStatement @ 0x7be4ff4d8 - 38,021,520 (1.12%) bytes
- oracle.jdbc.driver.T4CPreparedStatement @ 0x708134800 - 38,021,080 (1.12%) bytes
- oracle.jdbc.driver.T4CPreparedStatement @ 0x72103f828 - 37,864,440 (1.11%) bytes
- oracle.jdbc.driver.T4CPreparedStatement @ 0x723c48fb0 - 37,864,440 (1.11%) bytes
- oracle.jdbc.driver.T4CPreparedStatement @ 0x7344dbf48 - 35,757,696 (1.05%) bytes
- oracle.jdbc.driver.T4CPreparedStatement @ 0x7448f7690 - 35,757,696 (1.05%) bytes
- oracle.jdbc.driver.T4CPreparedStatement @ 0x750fc18e0 - 35,757,696 (1.05%) bytes
- oracle.jdbc.driver.T4CPreparedStatement @ 0x759268f68 - 35,757,696 (1.05%) bytes
- oracle.jdbc.driver.T4CPreparedStatement @ 0x7622b8908 - 35,757,696 (1.05%) bytes
- oracle.jdbc.driver.T4CPreparedStatement @ 0x77b425598 - 35,757,696 (1.05%) bytes
- oracle.jdbc.driver.T4CPreparedStatement @ 0x787359640 - 35,757,696 (1.05%) bytes
- oracle.jdbc.driver.T4CPreparedStatement @ 0x78cf1e900 - 35,757,696 (1.05%) bytes
- oracle.jdbc.driver.T4CPreparedStatement @ 0x79b4075a0 - 35,757,696 (1.05%) bytes
- oracle.jdbc.driver.T4CPreparedStatement @ 0x7a5aee0d0 - 35,757,696 (1.05%) bytes
- oracle.jdbc.driver.T4CPreparedStatement @ 0x7aae815b0 - 35,757,696 (1.05%) bytes
- oracle.jdbc.driver.T4CPreparedStatement @ 0x7af9ddad0 - 35,757,696 (1.05%) bytes
- oracle.jdbc.driver.T4CPreparedStatement @ 0x7b6092fd8 - 35,757,696 (1.05%) bytes
- oracle.jdbc.driver.T4CPreparedStatement @ 0x7c0e7ceb8 - 35,757,696 (1.05%) bytes
- oracle.jdbc.driver.T4CPreparedStatement @ 0x792337110 - 35,756,832 (1.05%) bytes
- oracle.jdbc.driver.T4CPreparedStatement @ 0x7a47618d8 - 35,756,832 (1.05%) bytes
- oracle.jdbc.driver.T4CPreparedStatement @ 0x72bfaa4a8 - 35,051,440 (1.03%) bytes
- oracle.jdbc.driver.T4CPreparedStatement @ 0x76da44760 - 35,051,440 (1.03%) bytes

These instances are referenced from one instance of "java.util.concurrent.ConcurrentHashMap$Segment[]", loaded by "<system class loader>".

Keywords: java.util.concurrent.ConcurrentHashMap$Segment[], org.apache.catalina.loader.StandardClassLoader @ 0x6fffbb5e8, oracle.jdbc.driver.T4CPreparedStatement

The dominator tree showed that a huge number of requests had caused the prepared statement cache to grow until the JVM ran out of memory.

This kind of situation mainly happens for two reasons:

- A prepared statement memory leak in the Java code

- Extreme concurrency with an inadequately sized JVM

In one use case, the application was going through a load test that fired thousands of SQL queries at the database via application workflows. In such cases you might see this error quite often: the JVM runs out of memory or stops responding to further requests. Although there were no memory leaks, a 3GB JVM heap (-Xmx) was inadequate to run very resource-intensive app tasks at a concurrency of 200.

If you suspect a code-related memory leak, it is best to take a heap dump during the OOM and start tracing: get the actual SQL from the leaked cursors and look in the code for where that SQL is created. A very common cause of leaked cursors is a missing call to .close():

Example:

    PreparedStatement stmt = null;
    try {
        stmt = con.prepareStatement("FIRST SQL");
        // ... more code ...
        stmt = con.prepareStatement("SECOND SQL"); // FIRST SQL is leaked here: it was never closed
        // ... more code ...
    } finally {
        if (stmt != null) {
            stmt.close(); // only the SECOND SQL statement gets closed
        }
    }
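A minimal sketch of the usual fix, assuming Java 7+ try-with-resources: give each statement its own try-with-resources block so it is closed as soon as its block exits, even if execute() throws. To keep the sketch runnable without an Oracle database, the Connection and PreparedStatement are hypothetical test doubles built with java.lang.reflect.Proxy that only count open statements; in real code `con` would come from your DataSource. The leaky() method mirrors the example above for comparison.

```java
import java.lang.reflect.Proxy;
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.SQLException;
import java.util.concurrent.atomic.AtomicInteger;

public class StatementLeakDemo {

    // Number of fake statements that were prepared but not yet closed.
    static final AtomicInteger OPEN = new AtomicInteger();

    // The leaky pattern: reassigning stmt orphans the FIRST SQL statement.
    static void leaky(Connection con) throws SQLException {
        PreparedStatement stmt = null;
        try {
            stmt = con.prepareStatement("FIRST SQL");
            stmt.execute();
            stmt = con.prepareStatement("SECOND SQL"); // FIRST SQL is never closed
            stmt.execute();
        } finally {
            if (stmt != null) stmt.close();
        }
    }

    // The fix: one try-with-resources block per statement.
    static void fixed(Connection con) throws SQLException {
        try (PreparedStatement first = con.prepareStatement("FIRST SQL")) {
            first.execute();
        }
        try (PreparedStatement second = con.prepareStatement("SECOND SQL")) {
            second.execute();
        }
    }

    // Hypothetical test double: prepareStatement() hands out fake statements.
    static Connection fakeConnection() {
        return (Connection) Proxy.newProxyInstance(
                StatementLeakDemo.class.getClassLoader(),
                new Class<?>[] {Connection.class},
                (proxy, method, args) -> {
                    if (method.getName().equals("prepareStatement")) {
                        OPEN.incrementAndGet();
                        return fakeStatement();
                    }
                    throw new UnsupportedOperationException(method.getName());
                });
    }

    // Hypothetical fake statement that only records whether it was closed.
    static PreparedStatement fakeStatement() {
        boolean[] closed = {false};
        return (PreparedStatement) Proxy.newProxyInstance(
                StatementLeakDemo.class.getClassLoader(),
                new Class<?>[] {PreparedStatement.class},
                (proxy, method, args) -> {
                    switch (method.getName()) {
                        case "execute":
                            return false; // pretend no ResultSet was produced
                        case "close":
                            if (!closed[0]) {
                                closed[0] = true;
                                OPEN.decrementAndGet();
                            }
                            return null;
                        default:
                            throw new UnsupportedOperationException(method.getName());
                    }
                });
    }

    public static void main(String[] args) throws SQLException {
        OPEN.set(0);
        leaky(fakeConnection());
        System.out.println("leaky: open statements = " + OPEN.get()); // 1

        OPEN.set(0);
        fixed(fakeConnection());
        System.out.println("fixed: open statements = " + OPEN.get()); // 0
    }
}
```

With the leaky version one statement stays open per call; under load, those orphaned statements (and their buffers) are exactly what piles up in a heap dump.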

If you are sure there are no memory leaks like the one above in your code, you can try the settings below to improve performance and reduce prepared statement OutOfMemoryErrors.

We made the following changes to keep the OOM errors under control. They assume the app runs on Apache Tomcat with Oracle as the backend database.

Tomcat changes:

1. In Tomcat\conf\context.xml, the JNDI connection pool's maxActive was increased (to 500) and validationQuery was set to "select 1 from dual" instead of "select * from dual":

    <Context>
        <Resource auth="Container" driverClassName="oracle.jdbc.OracleDriver" initialSize="5" maxActive="500" maxIdle="5" maxWait="5000" name="jdbc/oracle/UAT" password="******" poolPreparedStatements="true" type="javax.sql.DataSource" url="jdbc:oracle:thin:@uat:1521:UATDB" username="UAT_USER" validationQuery="select 1 from dual"/>
    </Context>

2. In Tomcat\conf\server.xml, the Connector's maxThreads was increased to 500 from the default of 200:

    <Connector port="5269" protocol="AJP/1.3" redirectPort="8443" SSLEnabled="false" tomcatAuthentication="false"/>

to

    <Connector port="5269" protocol="AJP/1.3" redirectPort="8443" SSLEnabled="false" tomcatAuthentication="false" maxThreads="500"/>

3. In Tomcat\conf\web.xml, session-timeout was reduced from 480 minutes (8 hours) to 60 minutes:

    <session-config>
        <session-timeout>480</session-timeout>
    </session-config>

to

    <session-config>
        <session-timeout>60</session-timeout>
    </session-config>

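4. JVM arguments: use -XX:+UseParNewGC together with -XX:+UseConcMarkSweepGC, set either in the registry (regedit) or in the JVM options. These ParNew/CMS flags target older HotSpot JVMs such as Java 7 and 8; they were removed in later Java releases.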

4.a. Navigate to the HKEY_LOCAL_MACHINE\SOFTWARE\Wow6432Node\Apache Software Foundation\Procrun 2.0\UAT_TomcatService\Parameters\Java key and add the following to its Options value:

    -XX:SurvivorRatio=8 -XX:TargetSurvivorRatio=90 -XX:MaxTenuringThreshold=15 -XX:+UseBiasedLocking -XX:+UseParNewGC -XX:+UseConcMarkSweepGC -XX:+CMSIncrementalMode -Dsun.rmi.dgc.client.gcInterval=3600000 -Dsun.rmi.dgc.server.gcInterval=3600000 -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=D:\OOM\log

4.b. The JVM -Xmx (maximum heap size) value was also increased to improve performance.
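Because the options in step 4 are set through the registry, it is worth confirming that the running JVM actually picked them up. A minimal sketch, assuming a HotSpot-based JDK since it uses the HotSpot-specific com.sun.management.HotSpotDiagnosticMXBean: it reads the HeapDumpOnOutOfMemoryError and HeapDumpPath flags and can write an on-demand .hprof dump for Eclipse MAT, useful when you want a snapshot before the OOM actually happens. The class name and file naming are hypothetical. Note that it inspects the JVM it runs in, so to check Tomcat's settings the code would have to run inside Tomcat (for example from a diagnostic endpoint in the webapp).

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.io.IOException;
import java.lang.management.ManagementFactory;
import java.nio.file.Files;
import java.nio.file.Path;

public class HeapDumpCheck {

    // HotSpot-specific diagnostic bean for the JVM this code runs in.
    static HotSpotDiagnosticMXBean diagnostics() {
        return ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
    }

    // True if this JVM was started with -XX:+HeapDumpOnOutOfMemoryError.
    static boolean heapDumpOnOomEnabled() {
        return Boolean.parseBoolean(
                diagnostics().getVMOption("HeapDumpOnOutOfMemoryError").getValue());
    }

    // Value of -XX:HeapDumpPath (empty if it was not set).
    static String heapDumpPath() {
        return diagnostics().getVMOption("HeapDumpPath").getValue();
    }

    // Writes an on-demand dump of live objects that Eclipse MAT can open.
    // The file name must end in .hprof and the file must not exist yet.
    static Path dumpHeap(Path dir) throws IOException {
        Path file = dir.resolve("manual-" + System.currentTimeMillis() + ".hprof");
        diagnostics().dumpHeap(file.toString(), true);
        return file;
    }

    public static void main(String[] args) throws IOException {
        System.out.println("HeapDumpOnOutOfMemoryError = " + heapDumpOnOomEnabled());
        System.out.println("HeapDumpPath = " + heapDumpPath());
        Path dump = dumpHeap(Files.createTempDirectory("heapdump"));
        System.out.println("Wrote " + dump + " (" + Files.size(dump) + " bytes)");
    }
}
```

Passing true to dumpHeap keeps only reachable objects (it triggers a full GC first), which is usually what you want when hunting for statements that are still referenced from a cache.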

5. Sizing maxCachedBufferSize for JDBC driver performance:

Oracle explains that each Statement (including PreparedStatement and CallableStatement) holds two buffers, one byte[] and one char[]. The char[] stores all the row data of character type: CHAR, VARCHAR2, NCHAR, etc. The byte[] stores all the rest. These buffers are allocated when the SQL string is parsed, generally the first time the Statement is executed. The Statement holds these two buffers until it is closed.

Since the buffers are allocated when the SQL is parsed, the size of the buffers depends not on the actual size of the row data returned by the query, but on the maximum size possible for the row data. After the SQL is parsed, the type of every column is known, and from that information the driver can compute the maximum amount of memory required to store each column. The driver also has the fetchSize, the number of rows to retrieve on each fetch. With the size of each column and the number of rows, the driver can compute the absolute maximum size of the data returned in a single fetch. That is the size of the buffers.

Example:

    CREATE TABLE TAB (ID NUMBER(10), NAME VARCHAR2(40), DOB DATE);

    ResultSet r = stmt.executeQuery("SELECT * FROM TAB");

When the driver executes the executeQuery method, the database parses the SQL and reports that the result has three columns: a NUMBER(10), a VARCHAR2(40), and a DATE. The first column needs (approximately) 22 bytes per row. The second column needs 40 chars per row. The third column needs (approximately) 22 bytes per row. Since each Java char takes two bytes, one row needs 22 + (40 * 2) + 22 = 124 bytes. The default fetchSize is 10 rows, so the driver allocates a char[] of 10 * 40 = 400 chars (800 bytes) and a byte[] of 10 * (22 + 22) = 440 bytes, a total of 1240 bytes. 1240 bytes is not going to cause a memory problem, but some query results are much bigger. In the worst case, consider a query that returns 255 VARCHAR2(4000) columns. Each column takes 8,000 bytes (about 8 KB) per row; times 255 columns, that is 2,040,000 bytes, or about 2 MB per row. If the fetchSize is set to 1000 rows, the driver will try to allocate a 2 GB char[]. This would be bad.
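The arithmetic above can be captured in a few lines. This is a minimal sketch of the sizing rule as described (character columns go into the char[], everything else into the byte[], each sized for fetchSize rows); it uses the approximate 22 bytes for NUMBER and DATE from the text and is not the driver's actual code. The class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

public class StatementBufferEstimate {

    // One result-set column: character columns (CHAR, VARCHAR2, NCHAR, ...) go in
    // the char[] buffer, all other types go in the byte[] buffer.
    static final class Column {
        final boolean character;
        final int maxPerRow; // chars for character columns, bytes otherwise

        Column(boolean character, int maxPerRow) {
            this.character = character;
            this.maxPerRow = maxPerRow;
        }
    }

    // Approximate per-row size of a NUMBER or DATE column, as in the text.
    static final int NUMBER_OR_DATE_BYTES = 22;

    // Length of the char[] allocated for one fetch.
    static long charBufferLength(List<Column> cols, int fetchSize) {
        long perRow = 0;
        for (Column c : cols) if (c.character) perRow += c.maxPerRow;
        return perRow * fetchSize;
    }

    // Length of the byte[] allocated for one fetch.
    static long byteBufferLength(List<Column> cols, int fetchSize) {
        long perRow = 0;
        for (Column c : cols) if (!c.character) perRow += c.maxPerRow;
        return perRow * fetchSize;
    }

    // Total memory in bytes; every Java char occupies two bytes.
    static long totalBytes(List<Column> cols, int fetchSize) {
        return 2 * charBufferLength(cols, fetchSize) + byteBufferLength(cols, fetchSize);
    }

    public static void main(String[] args) {
        // TAB (ID NUMBER(10), NAME VARCHAR2(40), DOB DATE) with the default fetchSize of 10
        List<Column> tab = List.of(
                new Column(false, NUMBER_OR_DATE_BYTES),
                new Column(true, 40),
                new Column(false, NUMBER_OR_DATE_BYTES));
        System.out.println(totalBytes(tab, 10)); // 1240

        // Worst case: 255 VARCHAR2(4000) columns with fetchSize 1000
        List<Column> wide = new ArrayList<>();
        for (int i = 0; i < 255; i++) wide.add(new Column(true, 4000));
        System.out.println(totalBytes(wide, 1000)); // 2040000000
    }
}
```

The second case shows why fetchSize and wide VARCHAR2 columns multiply so quickly: the buffer is sized for the declared maximum, not for the data actually returned.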

Oracle JDBC Drivers:

The 11.1.0.7.0 drivers introduce a connection property to address the large buffer problem. This property bounds the maximum size of buffer that will be saved in the buffer cache. All larger buffers are freed when the PreparedStatement is put in the Implicit Statement Cache and reallocated when the PreparedStatement is retrieved from the cache. If most PreparedStatements require a modest buffer, less than 100KB for example, but a few require much larger buffers, then setting the property to 110KB allows the frequently used small buffers to be reused without imposing the burden of allocating many maximum-size buffers. Setting this property can improve performance and can even prevent OutOfMemoryErrors.
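To make the rule concrete, here is a toy model of the policy described above, not Oracle's implementation: when a statement is put back into the cache, its buffer is kept only if it is at or below the bound, and a released buffer is reallocated when the statement is retrieved again. The class names are hypothetical; the sizes reuse the 1240-byte TAB query and one 2,040,000-byte row of the 255 × VARCHAR2(4000) query from the example, with a 110 KB bound.

```java
import java.util.ArrayList;
import java.util.List;

public class BufferCacheToyModel {

    // A cached statement and the fetch buffer it needs.
    static final class CachedStatement {
        final int bufferBytes;
        byte[] buffer; // null while the buffer is released

        CachedStatement(int bufferBytes) {
            this.bufferBytes = bufferBytes;
        }
    }

    final int bufferSizeBound;

    BufferCacheToyModel(int bufferSizeBound) {
        this.bufferSizeBound = bufferSizeBound;
    }

    // Statement retrieved from the cache: (re)allocate its buffer if it was freed.
    void retrieve(CachedStatement s) {
        if (s.buffer == null) s.buffer = new byte[s.bufferBytes];
    }

    // Statement closed and put back in the cache: free buffers above the bound.
    void putBack(CachedStatement s) {
        if (s.bufferBytes > bufferSizeBound) s.buffer = null;
    }

    // Bytes still held by statements sitting in the cache.
    static long retainedBytes(List<CachedStatement> cache) {
        long total = 0;
        for (CachedStatement s : cache) if (s.buffer != null) total += s.buffer.length;
        return total;
    }

    public static void main(String[] args) {
        BufferCacheToyModel model = new BufferCacheToyModel(110 * 1024); // 112640 bytes
        List<CachedStatement> cache = new ArrayList<>();
        cache.add(new CachedStatement(1_240));     // the TAB query, fetchSize 10
        cache.add(new CachedStatement(2_040_000)); // one row of 255 VARCHAR2(4000) columns
        for (CachedStatement s : cache) {
            model.retrieve(s);
            model.putBack(s);
        }
        System.out.println(retainedBytes(cache)); // 1240: only the small buffer stays cached
    }
}
```

The trade-off is visible in the model: the large statement pays an allocation on every retrieval, but thousands of idle cached statements no longer each pin a multi-megabyte buffer.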

The connection property is oracle.jdbc.maxCachedBufferSize, specified in bytes. It can also be passed as a JVM argument, e.g. -Doracle.jdbc.maxCachedBufferSize=112640 (110 KB = 110 * 1024 bytes).
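Besides the -D JVM argument, the property can be supplied as a connection property. A minimal sketch using only java.sql and java.util.Properties: it builds the properties for the UAT connection from step 1 (user name and URL taken from the context.xml above; the password is a placeholder) and shows where DriverManager.getConnection(url, props) would use them. Actually connecting needs the Oracle JDBC driver (11.1.0.7.0 or later) on the classpath and a reachable database, so main only prints the value. With Tomcat's default DBCP pool, the Resource element's connectionProperties attribute ("name=value;" pairs) should serve the same purpose; verify that against your pool's documentation.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.SQLException;
import java.util.Properties;

public class OracleConnectionProps {

    // 110 KB expressed in bytes, matching the -D value above.
    static final int MAX_CACHED_BUFFER_SIZE = 110 * 1024; // 112640

    // Builds connection properties carrying the buffer-size bound.
    static Properties connectionProperties(String user, String password) {
        Properties props = new Properties();
        props.setProperty("user", user);
        props.setProperty("password", password);
        props.setProperty("oracle.jdbc.maxCachedBufferSize",
                Integer.toString(MAX_CACHED_BUFFER_SIZE));
        return props;
    }

    // Opens a connection; requires the Oracle driver and a reachable database.
    static Connection connect(String url, Properties props) throws SQLException {
        return DriverManager.getConnection(url, props);
    }

    public static void main(String[] args) {
        Properties props = connectionProperties("UAT_USER", "******");
        System.out.println(props.getProperty("oracle.jdbc.maxCachedBufferSize")); // 112640
        // connect("jdbc:oracle:thin:@uat:1521:UATDB", props) would open a real connection.
    }
}
```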

A few other settings worth looking at are oracle.jdbc.useThreadLocalBufferCache and oracle.jdbc.implicitStatementCacheSize. A good read on these is Oracle's database memory PDF.
